This post explains how to sort a HashMap by key and by value, using a TreeMap for the keys and a Comparator for the values.

Step 1: First, create an Employee class that holds the details of an employee (ID, name and salary).

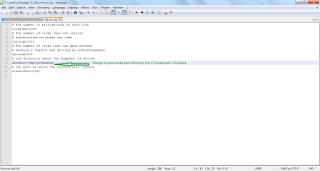

```java
/**
 * @author rajusiva
 */
public class Employee {

    Integer empId;
    String name;
    Float salary;

    public Employee(Integer id, String name, Float sal) {
        this.empId = id;
        this.name = name;
        this.salary = sal;
    }

    @Override
    public String toString() {
        return "Emp Id: " + this.empId + " Name: " + this.name + " salary: " + this.salary;
    }

    public Integer getEmpId() {
        return empId;
    }

    public void setEmpId(Integer empId) {
        this.empId = empId;
    }

    public String getName() {
        return name;
    }

    public void setName(String name) {
        this.name = name;
    }

    public Float getSalary() {
        return salary;
    }

    public void setSalary(Float salary) {
        this.salary = salary;
    }
}
```

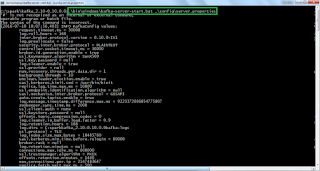

Step 2: The class below sorts the map by key (by copying it into a TreeMap) and by value (with a custom Comparator).

```java
import java.util.Collections;
import java.util.Comparator;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.LinkedList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/**
 * This class sorts a map of Employee objects by key and by value, using a
 * custom Comparator that overrides the compare method.
 *
 * @author rajusiva
 */
public class CustomHashMapSort {

    public static void main(String[] args) {
        Map<String, Employee> map = new HashMap<>();
        map.put("205", new Employee(1, "siva", 75000f));
        map.put("202", new Employee(2, "raju", 85000f));
        map.put("203", new Employee(3, "kumar", 50000f));
        map.put("204", new Employee(4, "arjun", 35000f));
        map.put("200", new Employee(5, "neha", 45000f));
        map.put("198", new Employee(6, "sneha", 25000f));

        // Sort by key: a TreeMap keeps its keys in their natural (here, String) order
        Map<String, Employee> sortedMap = new TreeMap<>(map);
        for (Map.Entry<String, Employee> entry : sortedMap.entrySet()) {
            System.out.println("Sort By key [" + entry.getKey() + "] [" + entry.getValue() + "]");
        }

        System.out.println("=============================================");

        // Sort by value: order the entries by employee name
        Map<String, Employee> sortedMapByValue = sortByValue(map);
        for (Map.Entry<String, Employee> entry : sortedMapByValue.entrySet()) {
            System.out.println("Sort By Value by Name Key-[ " + entry.getKey() + "] value [" + entry.getValue().getName() + "]");
        }
    }

    /**
     * Sorts the map by its Employee values. This version compares the names;
     * to sort by empId or salary, change compare() to use getEmpId() or getSalary().
     *
     * @param empLoyeeMap map of keys to Employee values
     * @return a LinkedHashMap whose iteration order follows the sorted values
     */
    public static LinkedHashMap<String, Employee> sortByValue(Map<String, Employee> empLoyeeMap) {
        // Copy the entries into a list so they can be sorted
        List<Map.Entry<String, Employee>> list = new LinkedList<>(empLoyeeMap.entrySet());

        // Sort the entries with a Comparator that compares the employee names
        Collections.sort(list, new Comparator<Map.Entry<String, Employee>>() {
            @Override
            public int compare(Map.Entry<String, Employee> value1, Map.Entry<String, Employee> value2) {
                return value1.getValue().getName().compareTo(value2.getValue().getName());
            }
        });

        // A LinkedHashMap preserves insertion order, so it keeps the sorted order
        LinkedHashMap<String, Employee> sortedHashMap = new LinkedHashMap<>();
        for (Map.Entry<String, Employee> entry : list) {
            sortedHashMap.put(entry.getKey(), entry.getValue());
        }
        return sortedHashMap;
    }
}
```
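On Java 8 or later, the same sort by value can be sketched more compactly with the Stream API, using `Map.Entry.comparingByValue` together with a comparator built by `Comparator.comparing`. This is an alternative to the anonymous Comparator in `sortByValue`, not the author's code: the class name `StreamSortDemo` and its `sortByValue(map, comparator)` signature are hypothetical, and `Employee` is a trimmed copy of the Step 1 class so the example compiles on its own. Passing a different comparator (for example, salary reversed) changes the sort field without touching the method.

```java
import java.util.Comparator;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

public class StreamSortDemo {

    // Trimmed copy of the Employee class from Step 1 (only what this sketch needs)
    static class Employee {
        final Integer empId;
        final String name;
        final Float salary;

        Employee(Integer id, String name, Float sal) {
            this.empId = id;
            this.name = name;
            this.salary = sal;
        }

        String getName() { return name; }
        Float getSalary() { return salary; }
    }

    // Hypothetical helper: sorts the entries by value with the given comparator and
    // collects them into a LinkedHashMap so that iteration follows the sorted order.
    static LinkedHashMap<String, Employee> sortByValue(Map<String, Employee> map,
                                                       Comparator<Employee> byValue) {
        return map.entrySet().stream()
                .sorted(Map.Entry.comparingByValue(byValue))
                .collect(Collectors.toMap(
                        Map.Entry::getKey,
                        Map.Entry::getValue,
                        (a, b) -> a,          // keys are unique, so this merge never runs
                        LinkedHashMap::new));
    }

    public static void main(String[] args) {
        Map<String, Employee> map = new HashMap<>();
        map.put("205", new Employee(1, "siva", 75000f));
        map.put("202", new Employee(2, "raju", 85000f));
        map.put("203", new Employee(3, "kumar", 50000f));
        map.put("204", new Employee(4, "arjun", 35000f));
        map.put("200", new Employee(5, "neha", 45000f));
        map.put("198", new Employee(6, "sneha", 25000f));

        // By name, ascending: prints [204, 203, 200, 202, 205, 198]
        System.out.println(sortByValue(map, Comparator.comparing(Employee::getName)).keySet());
        // By salary, highest first: prints [202, 205, 203, 200, 204, 198]
        System.out.println(sortByValue(map, Comparator.comparing(Employee::getSalary).reversed()).keySet());
    }
}
```

The merge function `(a, b) -> a` is there only because the four-argument `Collectors.toMap` overload is the one that accepts a map supplier; since the entries come from an existing map, duplicate keys cannot occur.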

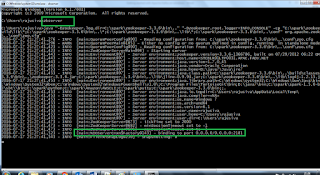

Output:

```text
Sort By key [198] [Emp Id: 6 Name: sneha salary: 25000.0]
Sort By key [200] [Emp Id: 5 Name: neha salary: 45000.0]
Sort By key [202] [Emp Id: 2 Name: raju salary: 85000.0]
Sort By key [203] [Emp Id: 3 Name: kumar salary: 50000.0]
Sort By key [204] [Emp Id: 4 Name: arjun salary: 35000.0]
Sort By key [205] [Emp Id: 1 Name: siva salary: 75000.0]
=============================================
Sort By Value by Name Key-[ 204] value [arjun]
Sort By Value by Name Key-[ 203] value [kumar]
Sort By Value by Name Key-[ 200] value [neha]
Sort By Value by Name Key-[ 202] value [raju]
Sort By Value by Name Key-[ 205] value [siva]
Sort By Value by Name Key-[ 198] value [sneha]
```
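The keys in this example are Strings, so the TreeMap orders them lexicographically (character by character). That matches numeric order here only because every key has three digits; a key such as "1000" would sort before "999". A minimal sketch, assuming you want numeric order while keeping String keys, is to give the TreeMap a comparator that parses each key. The class `NumericKeySortDemo` and method `sortKeysNumerically` are hypothetical names for illustration; storing the keys as `Integer` in the first place works just as well.

```java
import java.util.Comparator;
import java.util.Map;
import java.util.TreeMap;

public class NumericKeySortDemo {

    // Hypothetical helper: copies the map into a TreeMap whose String keys are
    // ordered by their numeric value. Assumes every key is a valid int;
    // Integer.parseInt throws NumberFormatException otherwise.
    static Map<String, String> sortKeysNumerically(Map<String, String> source) {
        Comparator<String> numeric = Comparator.comparingInt(Integer::parseInt);
        Map<String, String> sorted = new TreeMap<>(numeric);
        sorted.putAll(source);
        return sorted;
    }

    public static void main(String[] args) {
        Map<String, String> byString = new TreeMap<>();
        byString.put("999", "a");
        byString.put("1000", "b");
        byString.put("205", "c");

        // Natural String order compares character by character: prints [1000, 205, 999]
        System.out.println(byString.keySet());
        // Numeric order via the custom comparator: prints [205, 999, 1000]
        System.out.println(sortKeysNumerically(byString).keySet());
    }
}
```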

Hope this helps you understand how to sort a map of custom objects by key and by value using a Comparator.