Hive Background

1. Hive started at Facebook.

2. Data was collected by cron jobs every night into an Oracle DB.

3. ETL was done via hand-coded Python.

4. Data grew from tens of GBs (2006) to 1 TB/day of new data in 2007, and is now 10x that.

Facebook use case

1. Facebook has more than 1,000 million users.

2. Data is more than 500 TB per day.

3. More than 80k queries per day.

4. More than 500 million photos per day.

5. A traditional RDBMS is not the right solution for the above workload.

6. Hadoop MapReduce is the one to solve this.

7. But Facebook developers lacked the Java knowledge needed to write MapReduce code.

8. They knew SQL well.

So they introduced Hive.

Hive

1. Tables can be partitioned and bucketed.

Partitioning and bucketing are used for performance.

2. Schema flexibility and evolution

3. Easy to plug in custom mapper and reducer code

4. JDBC/ODBC Drivers are available.

5. Hive tables can be directly defined on HDFS

6. Extensible: types, formats, functions and scripts.

What do we mean by Hive?

1. A data warehousing package built on top of Hadoop.

2. Used for data analytics.

3. Targeted at users comfortable with SQL.

4. Its query language is similar to SQL and is called HiveQL.

5. It is used for managing and querying structured data.

6. It hides the complexity of Hadoop.

7. No need to learn Java and the Hadoop APIs.

8. Developed by Facebook and contributed to the community.

9. Facebook analyses terabytes of data using Hive.

Hive can be defined as below

• Hive defines a SQL-like query language called QL (HiveQL)

• Data warehouse infrastructure

• Allows programmers to plug in custom mappers and reducers.

• Provides tools to enable easy data ETL

Where to use Hive or Hive Applications?

1. Log processing

2. Data Mining

3. Document Indexing

4. Customer facing business intelligence

5. Predictive modeling and hypothesis testing

Why we go for Hive

1. It has SQL-like types, and we provide an explicit schema and types.

2. By using Hive we can partition the data.

3. It has its own Thrift server, so we can access data from other places.

4. Hive supports serialization and deserialization.

5. DFS access is implicit.

6. It supports joining, ordering and sorting.

7. It supports its own shell and Hive scripts.

8. It has a web interface.

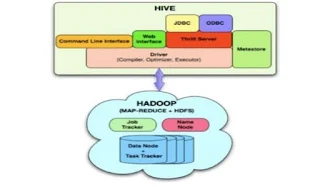

Hive Architecture

1. Hive data is stored in the Hadoop file system.

2. All Hive metadata, such as schema names, table structures and view names, is stored in the Metastore.

3. The Hive Driver takes the request, compiles it, converts it into a form that Hadoop understands, and executes it.

4. The Thrift server accesses Hive and fetches data from DFS.

Hive Components

Hive Limitations

1. Not designed for online transaction processing.

2. Does not offer real time queries and row level updates

3. Latency for Hive queries is high (a query can take minutes to process).

4. Provides acceptable latency for interactive data browsing

5. It is not suitable for OLTP type applications.

Hive Query Language Abilities

Differences between a traditional RDBMS and Hive

1. Hive does not verify the data when it is loaded; it does so when a query is issued.

2. Schema on read makes the initial load very fast. The load operation is just a file copy or move.

3. No updates, transactions or indexes.
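The schema-on-read behaviour described above can be sketched in plain Python. This is only an illustration of the idea, not Hive's implementation: the function names load and read_rows, the two-column schema and the tab delimiter are all assumptions made for this example. Loading just moves the file; the types are applied only when the rows are read, and a field that does not parse comes back as None, similar to how Hive returns NULL for such a field.

```python
import shutil
import tempfile
from pathlib import Path

# Hypothetical schema for this sketch: (column name, parser applied at read time).
SCHEMA = [("user_id", int), ("name", str)]


def load(source: Path, table_dir: Path) -> Path:
    """'Load' a file into a table: just a move, no parsing or validation."""
    table_dir.mkdir(parents=True, exist_ok=True)
    return Path(shutil.move(str(source), str(table_dir / source.name)))


def read_rows(table_dir: Path):
    """Apply the schema only now, at query time; unparseable fields become None."""
    for data_file in sorted(table_dir.iterdir()):
        for line in data_file.read_text().splitlines():
            row = {}
            for (name, parse), raw in zip(SCHEMA, line.split("\t")):
                try:
                    row[name] = parse(raw)
                except ValueError:
                    row[name] = None
            yield row


def demo():
    """Write a small file with one bad value, load it, then read it back."""
    with tempfile.TemporaryDirectory() as tmp:
        source = Path(tmp) / "users.tsv"
        source.write_text("1\talice\nnot-a-number\tbob\n")
        table_dir = Path(tmp) / "warehouse" / "users"
        load(source, table_dir)
        return list(read_rows(table_dir))
```

Calling demo() shows that the bad value was accepted at load time and only surfaces, as None, when the rows are read.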

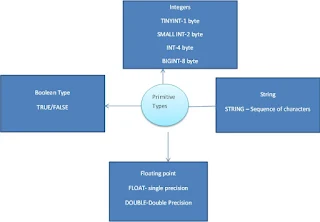

Hive supported data types

Hive Complex types:

Complex types can be built up from primitive types and other composite types using the below operators.

Operators

1. Structs: Fields can be accessed using the DOT (.) notation.

2. Maps: (key-value tuples) Elements can be accessed using the [element-name] notation.

3. Arrays: (indexable lists) Elements can be accessed using the [n] notation, where n is a zero-based index into the array.
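As a rough analogy only, the three access notations map onto familiar Python structures. The record below and its field names are invented for illustration, and this is Python, not HiveQL.

```python
from dataclasses import dataclass


@dataclass
class Address:  # plays the role of a struct
    city: str
    zip_code: str


# One hypothetical row with a struct, a map and an array column.
row = {
    "address": Address(city="Chennai", zip_code="600001"),
    "phones": {"home": "111", "work": "222"},  # map: key-value pairs
    "skills": ["hive", "hadoop", "sql"],       # array: indexable list
}

city = row["address"].city          # struct field via dot notation
work_phone = row["phones"]["work"]  # map value via [element-name]
first_skill = row["skills"][0]      # array element via [n], zero-based
```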

Hive Data Models

1. Databases

Namespaces, e.g. the finance and inventory databases can each have an Employee table, in two different databases

2. Tables

Schema in namespaces

3. Partitions

How data is stored in HDFS

Grouping data based on some columns

Can have one or more columns

4. Buckets and Clusters

Partitions divided further into buckets on some other column

Use for data sampling
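To make "how data is stored in HDFS" concrete: Hive conventionally keeps each partition in its own subdirectory named column=value under the table's directory. The sketch below builds such paths and shows why a filter on a partition column can skip whole directories. The warehouse root, the table name logs and the partition columns dt and country are assumptions for this example.

```python
from pathlib import PurePosixPath

# Assumed warehouse root for this sketch; real deployments configure their own.
WAREHOUSE = PurePosixPath("/user/hive/warehouse")


def partition_path(table: str, partition: dict) -> PurePosixPath:
    """Build one partition's directory: one 'column=value' level per partition column."""
    path = WAREHOUSE / table
    for column, value in partition.items():
        path = path / f"{column}={value}"
    return path


def prune(paths, column: str, value: str):
    """Keep only partition directories matching column=value; the rest is never read."""
    return [p for p in paths if f"{column}={value}" in p.parts]


paths = [
    partition_path("logs", {"dt": "2013-01-01", "country": "IN"}),
    partition_path("logs", {"dt": "2013-01-01", "country": "US"}),
    partition_path("logs", {"dt": "2013-01-02", "country": "IN"}),
]
selected = prune(paths, "country", "IN")
```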

Hive Data in the order of granularity

Buckets

Buckets give extra structure to the data that may be used for more efficient queries

A join of two tables that are bucketed on the same columns, including the join column, can be implemented as a map-side join.

Bucketing by user ID means we can quickly evaluate a user-based query by running it on a randomized sample of the total set of users.
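The bucketing ideas above can be sketched as follows. Rows are assigned to a bucket by hashing the bucketing column modulo the number of buckets. The hash used here (the integer itself) and the bucket count of 4 are assumptions for the example, not necessarily what a given Hive version does. Sampling then means reading a single bucket, and a join of two tables bucketed the same way only needs to pair bucket i of one table with bucket i of the other.

```python
from collections import defaultdict

NUM_BUCKETS = 4  # assumed bucket count for this sketch


def bucket_of(user_id: int) -> int:
    """Assign a row to a bucket; a stand-in for a hash-mod-buckets rule."""
    return user_id % NUM_BUCKETS


def bucketize(rows, key):
    """Group rows into buckets by the bucketing column."""
    buckets = defaultdict(list)
    for row in rows:
        buckets[bucket_of(row[key])].append(row)
    return buckets


def bucketed_join(left, right, key):
    """Join bucket by bucket: equal keys always land in the same bucket number."""
    joined = []
    for b in range(NUM_BUCKETS):
        lookup = defaultdict(list)
        for right_row in right.get(b, []):
            lookup[right_row[key]].append(right_row)
        for left_row in left.get(b, []):
            for right_row in lookup.get(left_row[key], []):
                joined.append({**left_row, **right_row})
    return joined


users = bucketize([{"user_id": i, "name": f"user{i}"} for i in range(8)], "user_id")
clicks = bucketize(
    [{"user_id": 5, "page": "home"}, {"user_id": 2, "page": "cart"}], "user_id"
)

sample = users[1]  # one bucket holds roughly a quarter of the users
joined = bucketed_join(users, clicks, "user_id")
```

Because each bucket pair can be processed without looking at any other bucket, the join needs no global shuffle of rows, which is the property a map-side join relies on.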

These are the basics about Hive.

Thank you for viewing the post.
